CHC Omeka Assets and Management

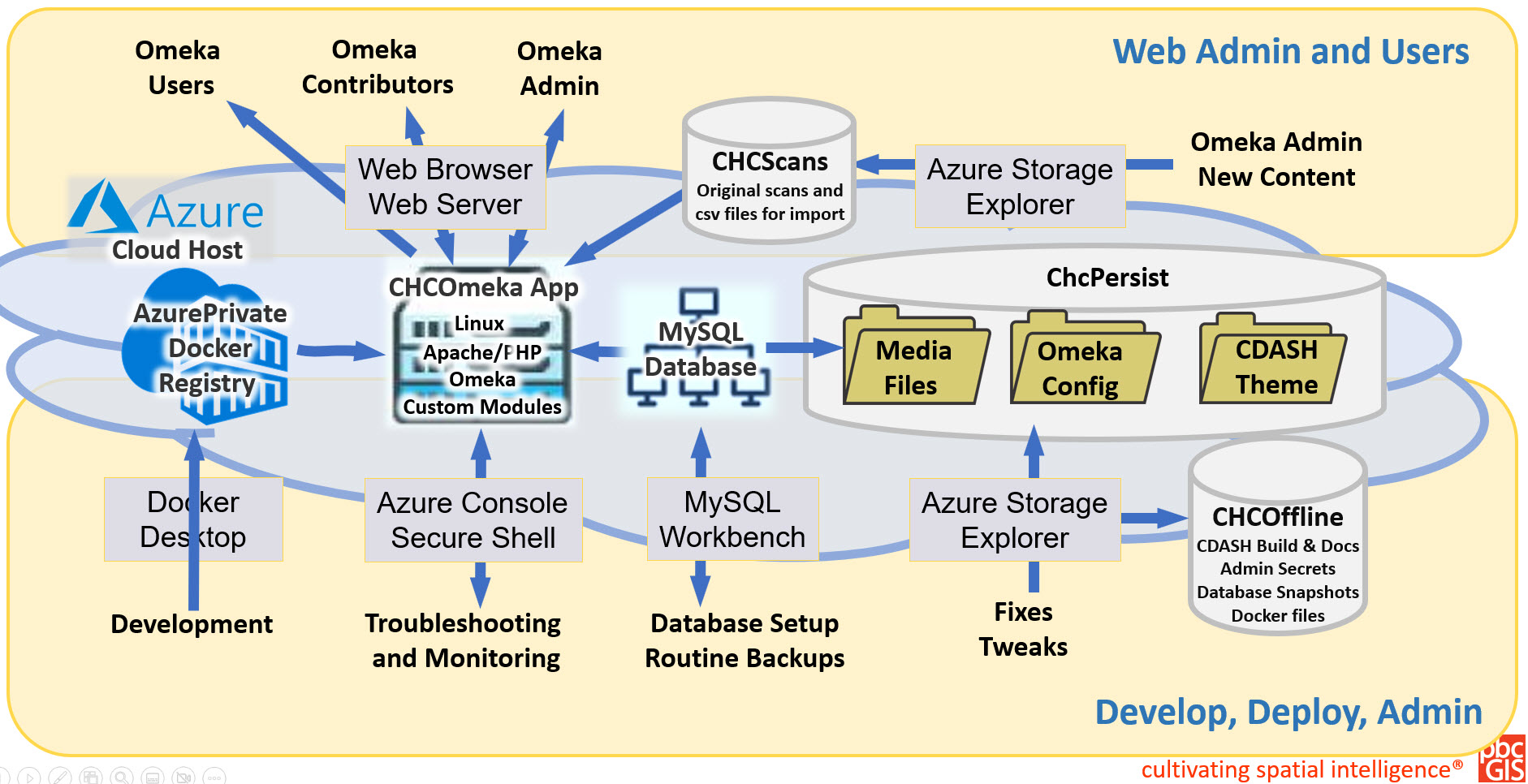

The deliverables from the CDASH project include a containerized web application that can be deployed on a cloud services provider such as Amazon Web Services or Microsoft Azure. The same container architecture can be used to install a complete instance of CDASH on a local computer for development purposes.

About Names: You will notice that we prefix certain file names and service names with CHC rather than CDASH. This is because we think of CDASH as one collection within the Historical Commission's greater Omeka installation. Although CDASH is currently the first and only collection, the CHC's Omeka installation may eventually host other collections.

Topic Index

- Containerized Web Application

- The CHC Omeka Application Stack

- Off-Line Files

- MySQL Data

- Persistent Application Files

- Scan Batches

CDASH Is a Containerized Web Application

One of the recent miracles of cloud computing is the containerization of web applications. A container in this sense is similar to what we used to call a "server." Whereas servers used to be actual computers, they may now be implemented as packages of software that behave like a server.

Containers let us run a web server and a database server that communicate with each other just as they would in the cloud, on whatever computer we choose. We can run the containers on a desktop or laptop computer to experiment with changes. When we are happy with the way the application works, we can deploy the tested container to the cloud (in our case, the city's cloud host, Azure).

Easy to Set Up Development Versioning

Because containers encapsulate the entire server and data structure of a web application, we can have a development environment that is identical to the production server, except for the changes we make to it. Our development environment is a safe sandbox for trying and testing changes. When we have perfected our upgrades, they can be applied to the production server.

Docker Resources and Setup

You can find all of the resources that make up the CDASH application in the CDASH GitHub repository, pbcGIS-cdash-dev. We will describe it in more detail later. For now, download the whole repository to your computer, install Docker, and then run these commands:

The CHC Omeka Stack

Web applications like CDASH are often described as a stack of software. The stack idea is helpful for understanding CDASH as a sort of pyramid of very common tools, with a few modifications near the top that define the features unique to CDASH.

- Host (Local or Cloud)

- Container Image (Docker)

- Operating System (Linux)

- Web Server (Apache)

- Server-Side Scripting Language (PHP)

- Database Server (MySQL)

- Asset / Content Management Application (Omeka-S with additional Modules)

- CDASH Customizations

Every element of this stack is reliable free software with its own documentation. The CDASH developer is assumed to be able to figure out all of those pieces. This documentation points out the CDASH customizations as a crib sheet for the developer. It will also be useful to the CDASH Administrator and Owner roles, since these customizations are among the CDASH assets that, if lost, could mean the death of the project.

Owner and Administrator Viewpoint: CDASH Project Assets

CDASH assets are the files necessary to build or rebuild the CDASH project. To begin, you can think of the initial state of CDASH, before any items are added, as a web server and an empty Omeka instance with the CDASH customizations applied.

CHC-Offline Files

The CHC-Offline folder contains all of the information necessary for building the CDASH application from scratch. The folder is also used to hold backups of the Omeka database taken at strategic times.

- Docs: Configuration information for the Azure host, including essential information for restarting, troubleshooting, backing up, and restoring the container with CDASH customizations and assets.

- CDASH-Prod-Docker: The latest Docker configuration and related resources for the CHC Omeka installation.

- Vocabulary: Custom CDASH vocabulary export/import files.

- CSVImportPlus: Development repository for the CDASH custom CSV Import module.

- Pages: HTML exports of pages that were created for CDASH.

- DBExports: Database exports, including a copy of the Omeka tablespace that can reconstruct an empty CDASH instance, and backups of the Omeka tablespace taken at intervals.
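Because CHC-Offline is what we would rebuild CDASH from, it is worth confirming now and then that a copy is complete. The following is a minimal Python sketch that assumes the subfolder names in the list above are the literal top-level directory names in every copy; the function name `missing_offline_folders` is hypothetical.

```python
from __future__ import annotations

from pathlib import Path

# Assumption: these names, taken from the CHC-Offline list, are the literal
# top-level directory names in every copy of CHC-Offline.
EXPECTED_SUBFOLDERS = (
    "Docs",
    "CDASH-Prod-Docker",
    "Vocabulary",
    "CSVImportPlus",
    "Pages",
    "DBExports",
)


def missing_offline_folders(root: str | Path) -> list[str]:
    """Return the expected CHC-Offline subfolders that are absent under root."""
    root_path = Path(root)
    return [name for name in EXPECTED_SUBFOLDERS if not (root_path / name).is_dir()]
```

Run it against each copy you keep; an empty list means every expected folder is present. It only checks that the folders exist, not that their contents are current.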

Note that a copy of the CHC-Offline files can be downloaded from GitHub. Because several files in this repository contain dummy passwords, the CHC Omeka administrator should keep her own copy of this file system containing the passwords for the production server. Keeping copies of CHC-Offline both on an Azure file share and on your local computer could be a life-saver if something happens to the Azure files.

Soft Delete Protection for the CHC-Offline Azure file share: 14 days.
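Since the GitHub copies carry only dummy passwords, a quick check before deploying can catch a production configuration that was never updated. Omeka S reads its database connection from `config/database.ini`, a file of `key = "value"` lines without a section header. This Python sketch assumes that format; the dummy values themselves are not listed in this document, so the caller passes them in, and both function names are hypothetical.

```python
from __future__ import annotations

import configparser


def read_database_ini(text: str) -> dict[str, str]:
    """Parse Omeka S style database.ini text into a dict of settings."""
    # interpolation=None so a '%' in a real password is taken literally.
    parser = configparser.ConfigParser(interpolation=None)
    # database.ini has no [section] header, so add one before parsing.
    parser.read_string("[database]\n" + text)
    # Omeka S wraps each value in double quotes; strip them.
    return {key: value.strip('"') for key, value in parser["database"].items()}


def still_has_dummy_password(text: str, dummy_passwords: set[str]) -> bool:
    """True if the password is empty or one of the known development dummies."""
    password = read_database_ini(text).get("password", "")
    return password == "" or password in dummy_passwords
```

Lines starting with `;` (such as the commented-out `port` setting) are treated as comments and ignored.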

The Initial Docker Application and Accumulated Data and Tweaks

The Docker folder contains scripts and resources that create a Linux server with a generic file system, plus the additional program files needed for an empty Omeka server. The Docker scripts produce an image that is stored on the Docker host. A container can be started from this image, stopped, and re-created from it to restore the initial state of the application. This makes it easy to rebuild the server when the host shuts down, or when we need to scale it up or move it.

But what about the data we add and the tweaks that we make to our application? We don't want these to be wiped out every time we load the image.

There are many things we do to our Omeka installation that we want to accumulate rather than be wiped out every time the container is reloaded from its image. These data accumulate in the Omeka tablespace in the MySQL database and in the subfolders of the CHC-Persist file system.
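One common way to keep accumulated files out of the image is a bind mount: a host folder is mounted over a folder inside the container, so whatever Omeka writes there survives when the container is re-created from the image. The Python sketch below only builds a `docker run` argument list. It assumes Omeka S is installed at `/var/www/html` inside the container and that the CHC-Persist subfolders are named `Files`, `Config`, and `Modules`; the image tag, container name, and port mapping are hypothetical, and the real CDASH Docker configuration may wire this up differently.

```python
from __future__ import annotations

from pathlib import PurePosixPath

# Assumption: Omeka S is installed at /var/www/html inside the container, as in
# many php:apache-based images. Check the CDASH Dockerfile for the real path.
OMEKA_ROOT = PurePosixPath("/var/www/html")

# Assumed mapping of CHC-Persist subfolders (host side) to the Omeka S
# directories they are mounted over inside the container.
PERSIST_MOUNTS = {"Files": "files", "Config": "config", "Modules": "modules"}


def docker_run_args(
    persist_root: str,
    image: str = "chc-omeka",  # hypothetical image tag
    mount_modules: bool = True,
) -> list[str]:
    """Build a `docker run` command that bind-mounts CHC-Persist into Omeka S.

    Anything written under the mounted folders lands on the host, so it
    survives when the container is removed and re-created from the image.
    """
    args = ["docker", "run", "-d", "--name", "chc-omeka", "-p", "8080:80"]
    for host_name, omeka_dir in PERSIST_MOUNTS.items():
        if omeka_dir == "modules" and not mount_modules:
            continue  # production bakes the modules into the image instead
        host_path = PurePosixPath(persist_root) / host_name
        args += ["-v", f"{host_path}:{OMEKA_ROOT / omeka_dir}"]
    args.append(image)
    return args
```

For example, `subprocess.run(docker_run_args("/srv/chc-persist"), check=True)` would start such a container; the host path is illustrative.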

The Omeka Tablespace in MySQL

Everything we see when we load a page in Omeka comes from static information about how to build pages, which is included with the initial Omeka installation, plus dynamic data pulled from tables in the MySQL database. All of the settings and configuration made to Omeka, including the customized vocabularies and resource templates created for the CDASH application, as well as information about items and media, are stored in these tables.

During routine use and administration of Omeka, there is no need to think about the Omeka tablespace in MySQL. However, if it ever becomes necessary to move the Omeka installation with its accumulated items and configuration, it is important to understand that much of the information you need is in this tablespace.
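When the installation has to move, the Omeka tablespace travels as a database export, like the ones kept in the DBExports folder. As a minimal sketch, this Python function builds a standard `mysqldump` command for that export; the database name, user, host, and file-naming pattern are hypothetical placeholders.

```python
from __future__ import annotations

from datetime import date


def mysqldump_args(
    database: str = "omeka",  # hypothetical name of the Omeka database
    host: str = "localhost",
    user: str = "omeka",
    out_dir: str = "DBExports",
    day: date | None = None,
) -> list[str]:
    """Build a mysqldump command that exports the whole Omeka tablespace.

    Credentials are deliberately left off the command line; mysqldump can
    read them from a MySQL option file such as ~/.my.cnf instead.
    """
    day = day or date.today()
    out_file = f"{out_dir}/{database}-{day.isoformat()}.sql"
    return [
        "mysqldump",
        "--single-transaction",  # consistent InnoDB snapshot without locking tables
        f"--host={host}",
        f"--user={user}",
        f"--result-file={out_file}",
        database,
    ]
```

Pass the list to `subprocess.run(..., check=True)`. Restoring is the reverse: feed the `.sql` file to the `mysql` client connected to an empty database.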

Backup and Recovery

When we set up the CDASH application for the first time, we save a copy of the initial tablespace that includes all of the CDASH customizations. Part of the configuration of our Azure host includes routines for taking backups of the data tables every day. These backups are deleted after 30 days.
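The retention routine itself is configured on the Azure host and is not described here. To illustrate the 30-day rule, this Python sketch picks out daily dumps older than the cutoff while never touching the saved initial tablespace. The file-naming scheme and the function name are assumptions.

```python
from __future__ import annotations

import re
from datetime import date, timedelta

# Hypothetical naming scheme for daily dumps, e.g. "omeka-2024-05-01.sql".
BACKUP_NAME = re.compile(r"^omeka-(\d{4})-(\d{2})-(\d{2})\.sql$")


def backups_to_delete(names: list[str], today: date, keep_days: int = 30) -> list[str]:
    """Return daily backups older than keep_days, oldest first.

    Files that do not match the daily naming scheme (for example the saved
    copy of the initial CDASH tablespace) are never selected.
    """
    cutoff = today - timedelta(days=keep_days)
    expired = []
    for name in names:
        match = BACKUP_NAME.match(name)
        if match is None:
            continue  # not a daily backup; leave it alone
        taken = date(*map(int, match.groups()))
        if taken < cutoff:
            expired.append((taken, name))
    return [name for _, name in sorted(expired)]
```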

CHCPersist Files

Some of the data, configuration, and interface tweaks that accumulate as we work with our Omeka application are stored as files. To protect them from being re-initialized when the CHC-Omeka image is reloaded, they are stored in a persistent file system, CHC-Persist. These files include:

- Files: A directory that contains copies of the original media files, renamed with unique ID strings that match references in the database. This folder also contains the large, medium, and small thumbnail images produced for each image as it is imported.

- Config: Config files contain settings that Omeka reads on the fly, such as the size of the thumbnail images and the connection strings used to connect Omeka to the database. The default passwords and connection information in the GitHub versions of these files are for local development. The CDASH administrator should keep her own copy of the chcpersist file system containing the secret passwords used in production.

- CHC-Scans: A folder that contains batches of scanned documents. Each batch also contains the initial metadata for the related document items. These batches are discussed in greater detail on a forthcoming page about importing items.

- Modules: CDASH depends on several Omeka modules. They are included in this folder so that they are available to the development instance. When preparing to deploy the production instance, zip this modules folder and place it in the CDASH-Prod-Docker folder, where it will become part of the Docker image. This is done for performance reasons.
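The packaging step for production can be scripted. This Python sketch zips the Modules folder of CHC-Persist into the production Docker folder using the standard library's `shutil.make_archive`. The archive name `modules.zip` and the folder name `Modules` are assumptions, so match them to what the production Dockerfile actually copies.

```python
from __future__ import annotations

import shutil
from pathlib import Path


def zip_modules(persist_root: str | Path, docker_dir: str | Path) -> Path:
    """Zip CHC-Persist's Modules folder into the production Docker folder.

    Produces <docker_dir>/modules.zip (a hypothetical file name) and returns
    its path. Entries in the archive are relative to the Modules folder.
    """
    modules_dir = Path(persist_root) / "Modules"  # assumed folder name
    if not modules_dir.is_dir():
        raise FileNotFoundError(f"no Modules folder under {persist_root}")
    archive = shutil.make_archive(
        base_name=str(Path(docker_dir) / "modules"),  # ".zip" is appended
        format="zip",
        root_dir=str(modules_dir),
    )
    return Path(archive)
```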

The storage account for chcpersist is set up to allow for recovery of accidentally deleted files for 30 days.

CHC-Scans Files

CDASH items and their associated media files are imported into Omeka through a modified version of the Omeka CSV Import module; the process is described on a forthcoming page of this manual. Batches are uploaded as zipped folders that contain the scanned documents and the CSV files that create the associated items with their initial metadata. To import the items, the batch folders are unzipped. Once the items are created, the unzipped batch may be deleted. The zipped batches are retained on Azure storage, where they can be useful if we ever need to re-import the items. We presume that the batches are also stored on a local file system in the CHC offices.
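The file handling around an import can be sketched in Python: unzip a batch next to the original, list the CSV files that will drive the import, and later delete only the unzipped copy. The import itself happens through the modified CSV Import module and is not shown; the batch layout (CSV files anywhere inside the zip) and the function names are assumptions.

```python
from __future__ import annotations

import shutil
import zipfile
from pathlib import Path


def unpack_batch(batch_zip: str | Path, work_dir: str | Path) -> tuple[Path, list[Path]]:
    """Unzip one scan batch; return the batch folder and the CSV files inside it.

    The zipped batch is left untouched so it can stay on Azure storage for any
    future re-import.
    """
    batch_zip = Path(batch_zip)
    # e.g. batch-001.zip is unpacked into <work_dir>/batch-001
    target = Path(work_dir) / batch_zip.stem
    with zipfile.ZipFile(batch_zip) as archive:
        archive.extractall(target)
    # Match .csv case-insensitively, anywhere inside the batch.
    csv_files = sorted(p for p in target.rglob("*") if p.suffix.lower() == ".csv")
    return target, csv_files


def discard_unpacked(batch_dir: str | Path) -> None:
    """Delete the unzipped copy once its items have been created in Omeka."""
    shutil.rmtree(batch_dir)
```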

CHC-Scans is set up on the Azure Cold storage tier, which offers cheap long-term storage while still allowing quick access.

After some experience we may re-think the necessity of keeping these files in the cloud.

For the development version of CHC Omeka, the CHCScans folder is kept as a sub-folder of the CHCPersist file system.